I’ve started using ChatGPT. We plan to go to Kingston, RI (USA) this summer. Here is a query:

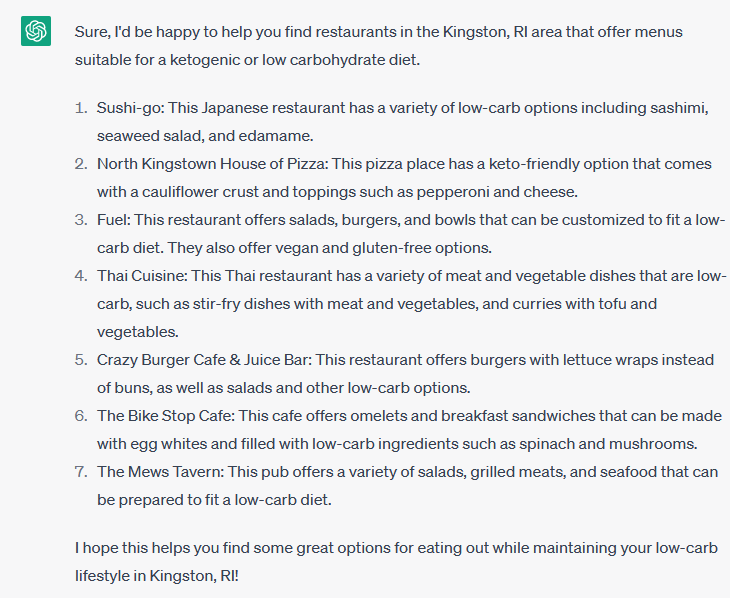

Could you tell me of restaurants in the Kingston, RI area that have menus suitable for someone on a ketogenic or low carbohydrate diet?

Here is the answer:

While there are some negatives (egg white omelets?), the list is not bad and much better than I could have done, considering it took all of 30 seconds. Or, put differently, I could spend a long time on search engines, Yelp, etc., and probably not get this list.

It makes me wonder what other questions I could ask it. “Could you tell me the best arguments against the idea that LDL causes heart disease?”; “Could you tell me the best arguments for the idea that LDL causes heart disease?”; “Could you tell me what ketone levels are recommended for people on a ketogenic diet?”; “What about for people who have a condition, such as depression?”; “What are the best papers I can read on trials of the ketogenic diet for depression?”

We had friends over, and one of them was using ChatGPT to explore different options in her work. It’s really amazing.

It’s not always great. I asked ChatGPT for a program for Word to perform a certain function. The generated code had a nice structure but did not really work. I was able to ask some people elsewhere and get a better version that did work.

However, the person who was using ChatGPT kept asking follow-up questions to get it to tailor its output to particular situations. I did not know you could do that when I asked about coding. Perhaps if I had said something like, “That code has a nice structure, but does not work for my application because of X. Could you improve the code to overcome this limitation?”, it would have worked.